Alibaba Group has supported the Olympic and Paralympic Winter Games Milano Cortina 2026 (Milano Cortina 2026) in becoming the most intelligent Games in Olympic history.

This article introduces ACK GIE's precision-mode prefix cache-aware routing that maximizes KV-Cache hit rates for distributed LLM inference.

This article introduces ACK One Fleet's multi-cluster canary release solution, integrated with Kruise Rollout, for safe AI inference deployments across hybrid and geo-distributed clouds.

This article introduces how combining LLM Agents with deterministic Workflows like Argo enables controllable, production-ready AI systems.

We are delighted to announce the official release of Qwen3.5, introducing the open-weight of the first model in the Qwen3.

Qwen App, Alibaba’s consumer-facing AI application, has spurred a behavioral shift toward AI-powered shopping during its Chinese New Year (CNY) campaign.

This article introduces UModel, Alibaba Cloud's ontology that transforms observability into a unified model-driven digital twin of IT systems.

Alibaba Chairman shares his perspective at the World Government Summit 2026 on why full-stack companies maintain an advantage as open-source AI providers.

Alibaba Cloud is partnering with OBS and the IOC to deploy advanced cloud and AI technologies for the Olympic and Paralympic Winter Games Milano Cortina 2026.

This article introduces a dual memory-pool inference framework enabling efficient hybrid Transformer-Mamba model execution by resolving conflicting caching mechanisms.

This article introduces engineering optimizations to 3FS—KVCache's foundation layer—across performance, productization, and cloud-native management for scalable AI inference.

This article introduces Dify's Nacos A2A plugins, enabling bidirectional agent collaboration—discovering external A2A agents and exposing Dify apps as discoverable agents via Nacos Registry.

The article introduces PolarDB IMCI’s native columnar full-text indexing for efficient, integrated text and hybrid vector search—eliminating the need for external search engines.

At Alibaba Cloud, we're not just delivering technology. We're co-creating a new chapter of AI with the world.

New programs for channel, ISV, and service partners to accelerate AI adoption, service transformation, and SMB growth

Are you planning to deploy a Large Language Model (LLM) but don't know how much GPU memory it needs? Or the AI model you are us...

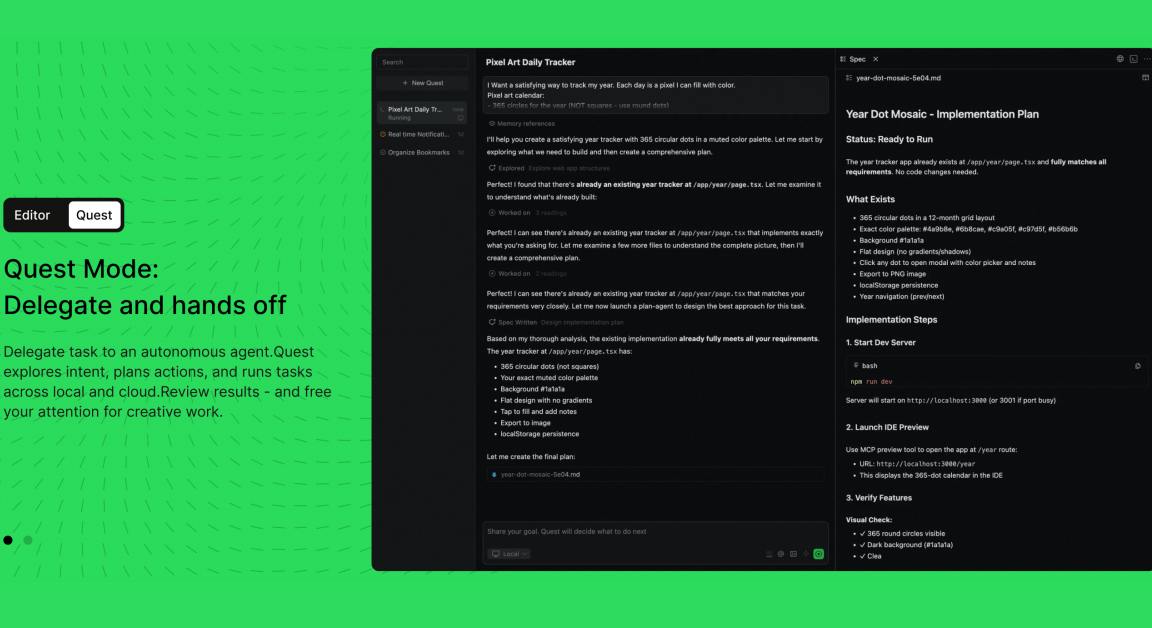

Quest 1.0, an autonomous agent capable of self-learning and rapid evolution, was unveiled last week.

This article introduces AI Context Engineering, a framework on Alibaba Cloud's AnalyticDB that prevents AI agents from "getting dumber" by intelligently managing their context and memory.

The article introduces the Ralph Loop—a continuous, self-iterating paradigm that keeps AI programming agents working until tasks are verifiably complete.

The article outlines how container technology is advancing to support LLMs and AI agents across data processing, training, inference, and deployment.